If you have ever worked with Docker on a Mac (or on Windows without a recent Linux subsystem), you know that it can be really, really slow … at least if any significant I/O is involved.

So, if you mount lots of files as a Docker volume, you *will* run into performance problems, period.

Docker Sync etc. may help a bit, but usually that won’t be enough… and it adds additional complexity and problems.

Since Shopware 6 currently also mounts everything as a volume if you use Docker, the load times of the shop are pretty horrible on a Mac: something around 40 seconds (!) per page on first load.

Shopware is working on improving the Docker setup, but here is a little workaround until that is available.

The trick is to mount only the folders you really need to work with locally, e.g. the "custom" (plugins) and "public" (assets) folders, into the container instead of the complete "app" folder.

The problem is, inside the container, you still need the other files and folders to have a working system.

So currently, the only solution is to clone the full Git repo locally (on the host), adjust the standard docker-compose.yml file (change the volumes), launch the containers and then, inside the container, basically clone everything again, so that it exists there but is not mounted/connected to the "outside" file system.

Here are the steps involved!

    git clone https://github.com/shopware/development.git shopware_development
    cd shopware_development
    # optionally, clone platform, too
    git clone git@github.com:shopware/platform.git

In docker-compose.yml, change

    volumes:
      - .:/app
      - ~/.composer:/.composer

to

    volumes:
      - ./custom:/app/custom
      - ./public:/app/public
      - ~/.npm:/.npm
      - ~/.composer:/.composer

Not sure anymore why I’ve added .npm here, might not be necessary 🙂
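Before starting the containers, it can be worth double-checking that the catch-all `.:/app` mount is really gone. Here is a minimal sketch, assuming the volume entries are written one per line as in the standard file. `has_full_app_mount` is a hypothetical helper name I made up for this, not something Shopware or psh provides:

```shell
# Hypothetical helper: succeeds (exit 0) if the given compose file still
# contains a volume entry that mounts the whole project (".:/app").
has_full_app_mount() {
    grep -Eq '^[[:space:]]*-[[:space:]]+\.:/app[[:space:]]*$' "$1"
}

# Usage: warn if the full mount is still present.
if [ -f docker-compose.yml ] && has_full_app_mount docker-compose.yml; then
    echo "docker-compose.yml still mounts the complete app folder" >&2
fi
```

The pattern only matches the exact `.:/app` entry, so the narrower `./custom:/app/custom` and `./public:/app/public` mounts do not trigger the warning.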

Now start the Docker containers with:

    ./psh.phar docker:start

Log in as root to adjust the folder ownership…

    ./psh.phar docker:ssh-root
    chown -R 501:dialout /app
    exit
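The `501` in the `chown` call is presumably the numeric ID of the host user (that is my assumption; on macOS the first user account usually gets 501). If yours differs, a small sketch like the following, run on the host and not in the container, prints the matching command. The `dialout` group and the `/app` path are simply taken from the command above:

```shell
# Run on the host: look up the numeric ID of the current user.
host_uid="$(id -u)"

# Build and print the chown call to use inside the container (as root).
# Assumption: the files in /app should belong to the host user's UID.
chown_cmd="chown -R ${host_uid}:dialout /app"
echo "$chown_cmd"
```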

To make sure MySQL is still running, run "docker-compose up -d" (or ./psh.phar docker:start).

Now go into the container again, this time as a regular user, and basically clone the repo again. I experimented with Git "init + remote add … + reset --hard" here to possibly keep the two mounted folders, but then it wouldn’t let me clone or threw some permission errors, so in the end I deleted them:

    ./psh.phar docker:ssh
    # inside the "app" folder here, there are only "public" and "custom"
    # so we have to remove them and clone the complete repo again, inside the container!
    rm -Rf custom public # may give docker warnings like "device busy", but should work ...
    git clone https://github.com/shopware/development.git .

    # optionally, clone platform
    git clone https://github.com/shopware/platform.git

Then, still inside the container (!), run the Shopware installation all at once (this may break because the MySQL container exits):

    ./psh.phar install

OR, execute the single steps, also inside the container (recommended as of now):

    ./psh.phar init
    ./psh.phar demo-data
    ./psh.phar storefront:init
    ./psh.phar administration:init

If the MySQL container exits (that may happen a couple of times while the commands run), run "docker-compose up -d" (or ./psh.phar docker:start) in another shell to start it again.
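If you get tired of restarting it by hand, you could let that second shell do it for you. This is only a rough sketch: `repeat_every` is a hypothetical helper that reruns a command a fixed number of times with a pause in between, and the interval and count in the example are arbitrary values I picked. It relies on `docker-compose up -d` leaving already running containers alone and starting stopped ones again.

```shell
# Hypothetical helper: run a command a fixed number of times,
# pausing between runs. Failures of the command are ignored so the
# loop keeps going.
# Usage: repeat_every SECONDS TIMES COMMAND [ARGS...]
repeat_every() {
    interval="$1"
    times="$2"
    shift 2
    i=0
    while [ "$i" -lt "$times" ]; do
        "$@" || true
        i=$((i + 1))
        # No pause needed after the final run.
        if [ "$i" -lt "$times" ]; then
            sleep "$interval"
        fi
    done
}

# Example, on the host in a second shell while the installation runs
# (10 seconds apart, 60 times, so roughly ten minutes):
# repeat_every 10 60 docker-compose up -d
```

This does not fix whatever makes the container exit, it just brings it back up in the background while the psh commands run inside the other container.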

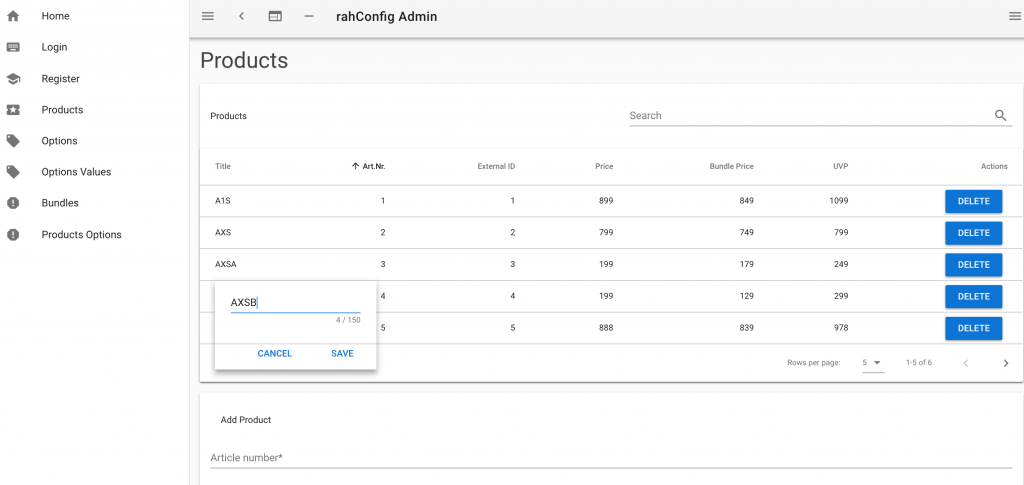

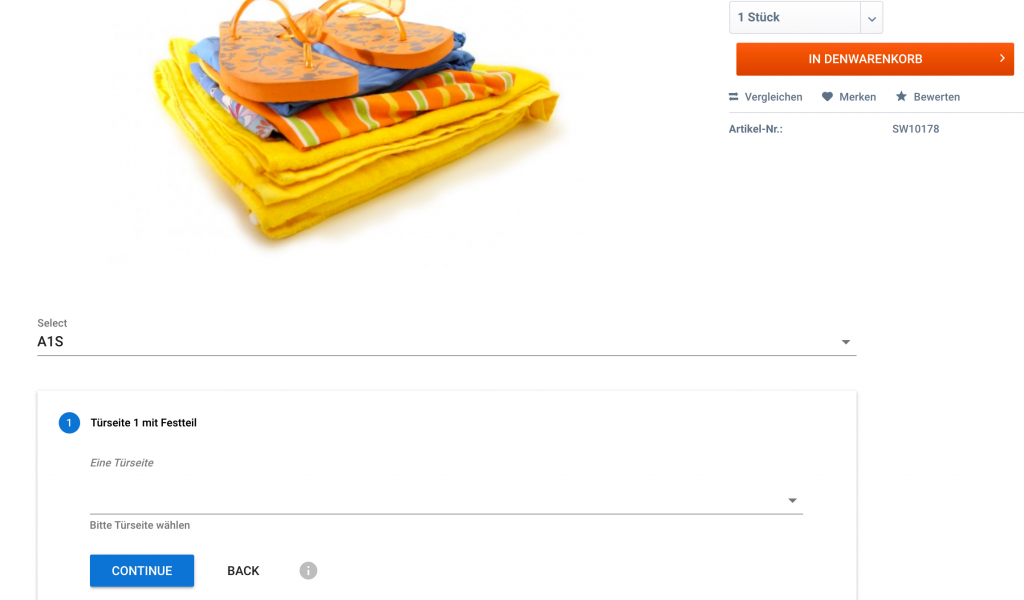

Finally, open localhost:8000/ 🙂 It should look something like this now on your MAC: